9/9/2024 M. Washington (Admin)

Import Data Fast into Fabric Using Parquet Files

To import data efficiently into Microsoft Fabric you want to use parquet files. You can leverage C# and Microsoft Blazor for a streamlined process. By utilizing the powerful Parquet.Net library in C#, you can quickly write parquet files, enabling you to handle large datasets with ease. This approach not only speeds up data import but also enhances the scalability and performance of your import process.

Traditional Way to Import Data Into Fabric

The standard way to import data into Fabric is:

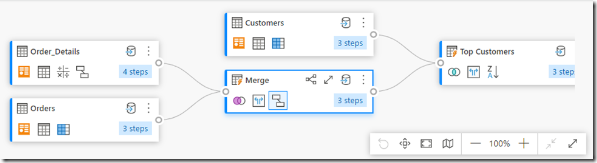

- Data pipelines: Provides a code-free or low-code way to manage data ingestion, allowing you to build complete ETL workflows. You can prepare the destination, run custom SQL, perform lookups, and move data between sources and destinations.

- Dataflows: Are a simpler alternative focused on easy data preparation, cleaning, and transformation without needing any code.

Here are the Problems:

- When something goes wrong you're stuck

- There is no step-by-step debugging. You have to diagnose problems by examining the logs but these are often missing important information to understand exactly what is going wrong.

- It is not very fast

- Difficult to learn and hard to understand

- Complex solutions created by others, and sometimes yourself, require a lot of clicking and drilling down into various settings to understand what a pipeline is doing

A Fast Way to Import Data Into Fabric

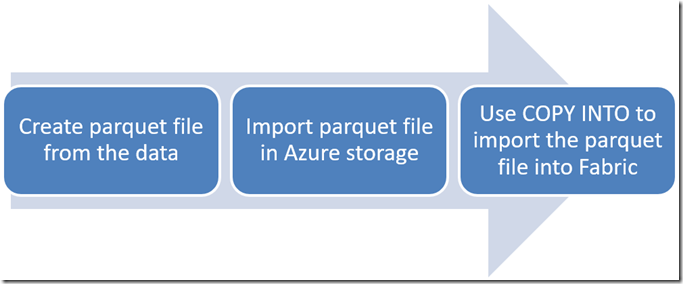

This article proposes using C# and Microsoft Blazor to import data instead. It uses this process:

- Create parquet file

- Parquet is a the native format of data in the Fabric warehouse. However, it is being used in this instance as a way to compress the data into a format that is easily imported into Fabric

- Import Parquet file into Azure Storage

- The parquet file must be placed in a location that allows the TSQL statement: COPY INTO to import the data. Azure Storage provides such a location. a SAS token will be created to allow Fabric the authentication to access the parquet file

- Use COPY INTO to import the parquet file into Fabric

- This TSQL statement can import a parquet file that has a million rows in seconds.

Sample Code

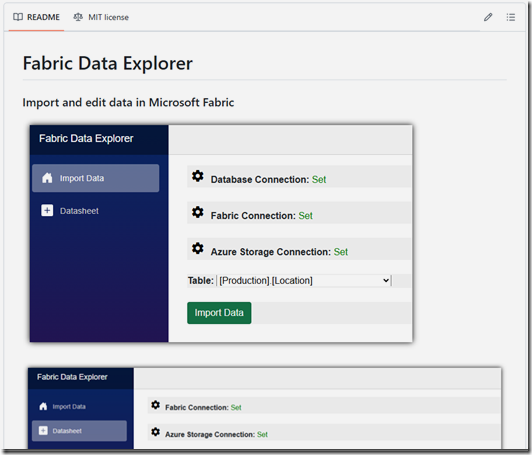

You can find the code for connecting to Microsoft Fabric, using Microsoft Blazor, in the Github project located at: https://github.com/ADefWebserver/FabricDataExplorer.

This project will allow you to import data from a SQL server into a Fabric Data Warehouse.

Walk-Thru

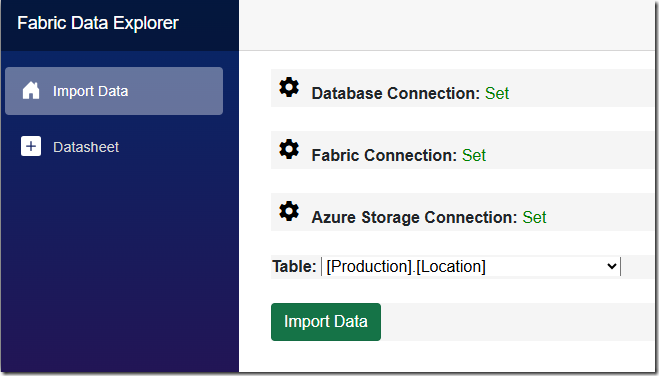

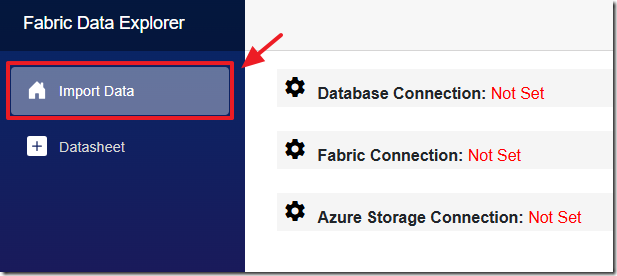

When you download and run the project at: https://github.com/ADefWebserver/FabricDataExplorer navigate to the Import Data tab.

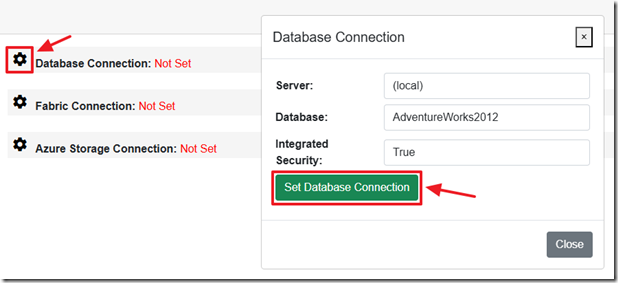

Click the Gear icon next to Database Connection to open the Database Connection dialog.

Connect to any SQL database that can be reached by the Blazor application and click the Set Database Connection button.

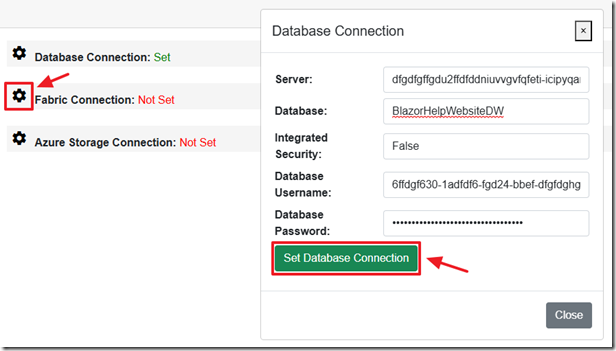

Click the Gear icon next to Database Connection to open the Database Connection dialog.

Connect to any Fabric Data Warehouse that can be reached by the Blazor application and click the Set Database Connection button.

Note: See the following article: Connect to Microsoft Fabric Using TSQL and Microsoft Blazor for instructions on connecting to Fabric using TSQL.

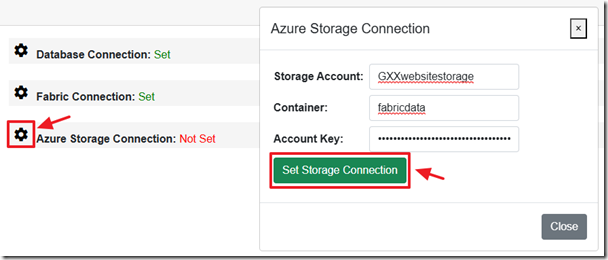

Click the Gear icon next to Azure Storage Connection to open the Azure Storage Connection dialog.

Enter the connection information and click the Set Storage Connection button.

Note: See https://learn.microsoft.com/en-us/azure/storage/blobs/storage-quickstart-blobs-portal for assistance on creating Azure Storage containers.

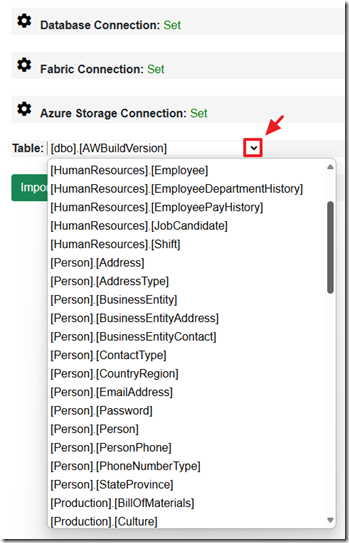

The tables in the SQL database will display.

Select a table to import.

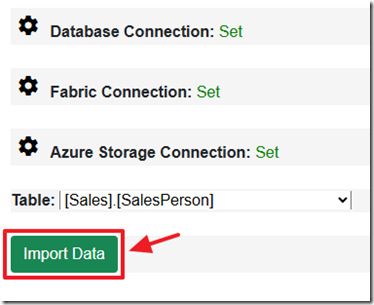

Once a table has been selected, click the Import Data button.

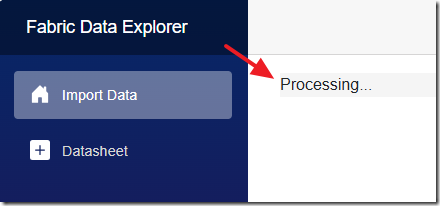

The import process will begin.

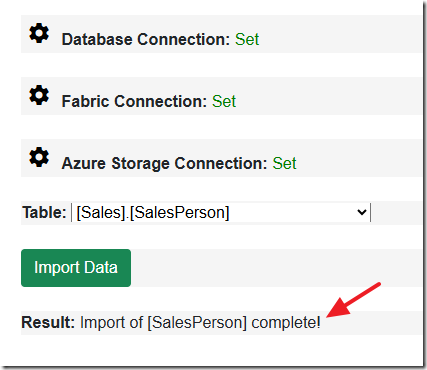

When complete, the confirmation result will display.

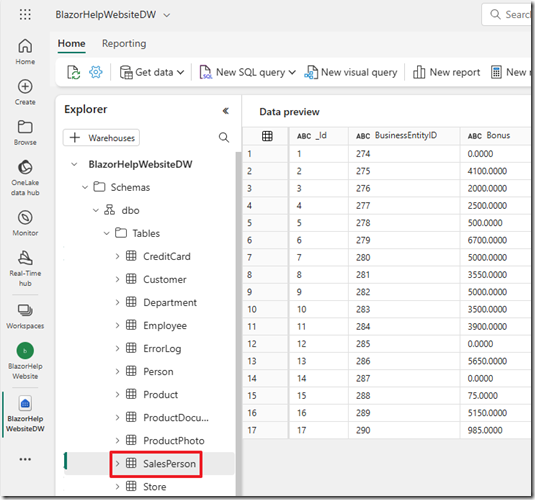

You can verify the data has been imported in the Fabric website.

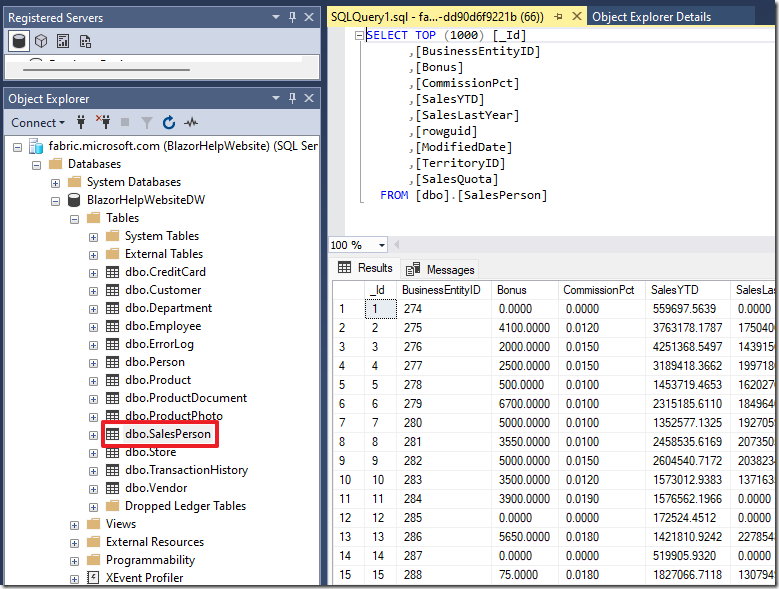

You can also verify the data has been imported using the Microsoft SQL Server Management Studio.

Note: See the following article: Connect to Microsoft Fabric Using TSQL and Microsoft Blazor for instructions on connecting to Fabric using Microsoft SQL Server Management Studio.

The Application Code

When the Import Data button is pressed, the ImportDataIntoFabric() method is called that retrieves the column names for the selected table from a SQL database:

using (SqlConnection connection = new SqlConnection(DatabaseConnectionString)){connection.Open();// Get the column names and wrap them in square brackets// Get a list of the column namesList<string> columns = new List<string>();DataTable Columns = connection.GetSchema("Columns");foreach (DataRow row in Columns.Rows){if ($"[{row["TABLE_SCHEMA"].ToString()}].[{row["TABLE_NAME"].ToString()}]" == selectedTable){columns.Add($"[{row["COLUMN_NAME"].ToString()}]");}}connection.Close();

The code then selects all columns from the selected table and adds a new column called _Id using the ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) function. This column is used to generate unique IDs for each row.

// Use columns to create the SQL Querystring columnString = string.Join(",", columns);string sqlQuery = $"SELECT ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) AS _Id,{columnString} FROM {selectedTable}";

The code then creates an instance of the ImportSQLIntoFabric class, which is responsible for importing data into Fabric. It sets various properties of the importIntoFabric object, including the SQL query, table name (after removing the schema), Fabric connection string, SQL connection string, and Azure storage account details.

The code then calls the ImportData() method of the importIntoFabric object, which performs the actual data import process. This method returns a message indicating the result of the import.

// Call ImportSQLIntoFabricImportSQLIntoFabric importIntoFabric = new ImportSQLIntoFabric();importIntoFabric.SqlQuery = sqlQuery;importIntoFabric.TableName = RemoveSchema(selectedTable);importIntoFabric.DataWarehouseConnectionString = FabricConnectionString;importIntoFabric.SQLConnectionString = DatabaseConnectionString;importIntoFabric.StorageAccountName = StorageAccountName;importIntoFabric.StorageContainer = ContainerName;importIntoFabric.StorageAccountKey = AccountKey;Message = await importIntoFabric.ImportData();

The ImportSQLIntoFabric Class

This code defines a class ImportSQLIntoFabric that facilitates the process of importing data from an SQL Server database into the Fabric Data Warehouse through Azure Blob Storage using Parquet format.

The main method ImportData executes a SQL query, extracts data fields, and transforms the result set into a Parquet table.

var databaseFields =ExtractFields(SqlQuery);var parquetSchema =new ParquetSchema(databaseFields.Select(f => new DataField<string>(f)).ToList());var parquetTable =new Parquet.Rows.Table(parquetSchema);using (var connection = new SqlConnection(SQLConnectionString)){connection.Open();using (var command = new SqlCommand(SqlQuery, connection))using (var reader = command.ExecuteReader()){while (reader.Read()){var row = new Row(databaseFields.Select((f, i) => CleanValue(reader.GetValue(i)?.ToString())).ToArray());parquetTable.Add(row);}}}

It then writes the table into an in-memory stream and uploads it to an Azure Blob Storage container.

using var ms = new MemoryStream();await parquetTable.WriteAsync(ms);ms.Position = 0;var blobClient = new BlobContainerClient($"DefaultEndpointsProtocol=https;" +$"AccountName={StorageAccountName};" +$"AccountKey={StorageAccountKey};" +$"EndpointSuffix=core.windows.net",StorageContainer);string fileName = Path.GetFileName(TableName) + ".parquet";var blob = blobClient.GetBlobClient($"{fileName}");await blob.UploadAsync(ms, true);await Task.Delay(5000);

After uploading the data, a SAS (Shared Access Signature) token is generated, which allows access to the blob with limited permissions.

private string GenerateSasToken(){var sasBuilder = new AccountSasBuilder{Protocol = SasProtocol.Https,Services = AccountSasServices.Blobs,ResourceTypes = AccountSasResourceTypes.Container | AccountSasResourceTypes.Object,ExpiresOn = DateTimeOffset.UtcNow.AddHours(1)};sasBuilder.SetPermissions(AccountSasPermissions.Read | AccountSasPermissions.Write);return sasBuilder.ToSasQueryParameters(new StorageSharedKeyCredential(StorageAccountName,StorageAccountKey)).ToString();}

This SAS token is used to construct a list of SQL scripts that create the necessary table schema in the Fabric Data Warehouse.

The method GenerateSqlScripts constructs SQL commands for creating the table, adding a primary key, and performing a bulk data load using the COPY INTO command.

private List<string> GenerateSqlScripts(string tableName, IList<string> fields, string sasToken){var fieldSchema =string.Join(", ", fields.Select(f => $"[{f}] VARCHAR(4000)"));var filePath =$"https://{StorageAccountName}.blob.core.windows.net/{StorageContainer}/{tableName}.parquet";return new List<string>{$"IF OBJECT_ID('[dbo].{tableName}', 'U') IS NOT NULL DROP TABLE dbo.{tableName}",AddNotNULL($"CREATE TABLE [dbo].{tableName} ({fieldSchema})"),$"ALTER TABLE [dbo].{tableName} ADD CONSTRAINT PK_{StripBrackets(tableName)} PRIMARY KEY NONCLUSTERED (_Id) NOT ENFORCED;",$"COPY INTO [dbo].{tableName} " +$"FROM '{filePath}' " +$"WITH (file_type = 'PARQUET', CREDENTIAL=(IDENTITY= 'Shared Access Signature', SECRET='{sasToken}'))"};}

Finally the scripts are executed to import the data.

using (var connection = new SqlConnection(DataWarehouseConnectionString)){connection.Open();foreach (var sql in sqlScripts){using var command = new SqlCommand(sql, connection);await command.ExecuteNonQueryAsync();await Task.Delay(5000); // To ensure operations are sequenced properly}}

Links

FabricDataExplorer (Github - Source code)

Connect to Microsoft Fabric Using TSQL and Microsoft Blazor

https://github.com/aloneguid/parquet-dotnet

Microsoft Entra authentication in Synapse Data Warehouse - Microsoft Fabric | Microsoft Learn

https://learn.microsoft.com/en-us/fabric/data-warehouse/ingest-data

https://learn.microsoft.com/en-us/fabric/enterprise/buy-subscription

BlazorData.Net

BlazorData.Net